(CLO) OpenAI's speech-to-text tool Whisper is advertised as being "near-human-level robust and accurate," but it has one major drawback: it is prone to fabricating snippets of text or even entire sentences.

Some of the text it fabricates, known in the industry as hallucinations, includes racial commentary, violent rhetoric and even imaginary medical treatments, experts say.

Experts say such fabrications are serious because Whisper is used in many industries around the world to translate and transcribe interviews, generate text and subtitle videos.

More worryingly, medical centers are using Whisper-based tools to record patient-doctor consultations, despite OpenAI's warning that the tool should not be used in "high-risk areas."

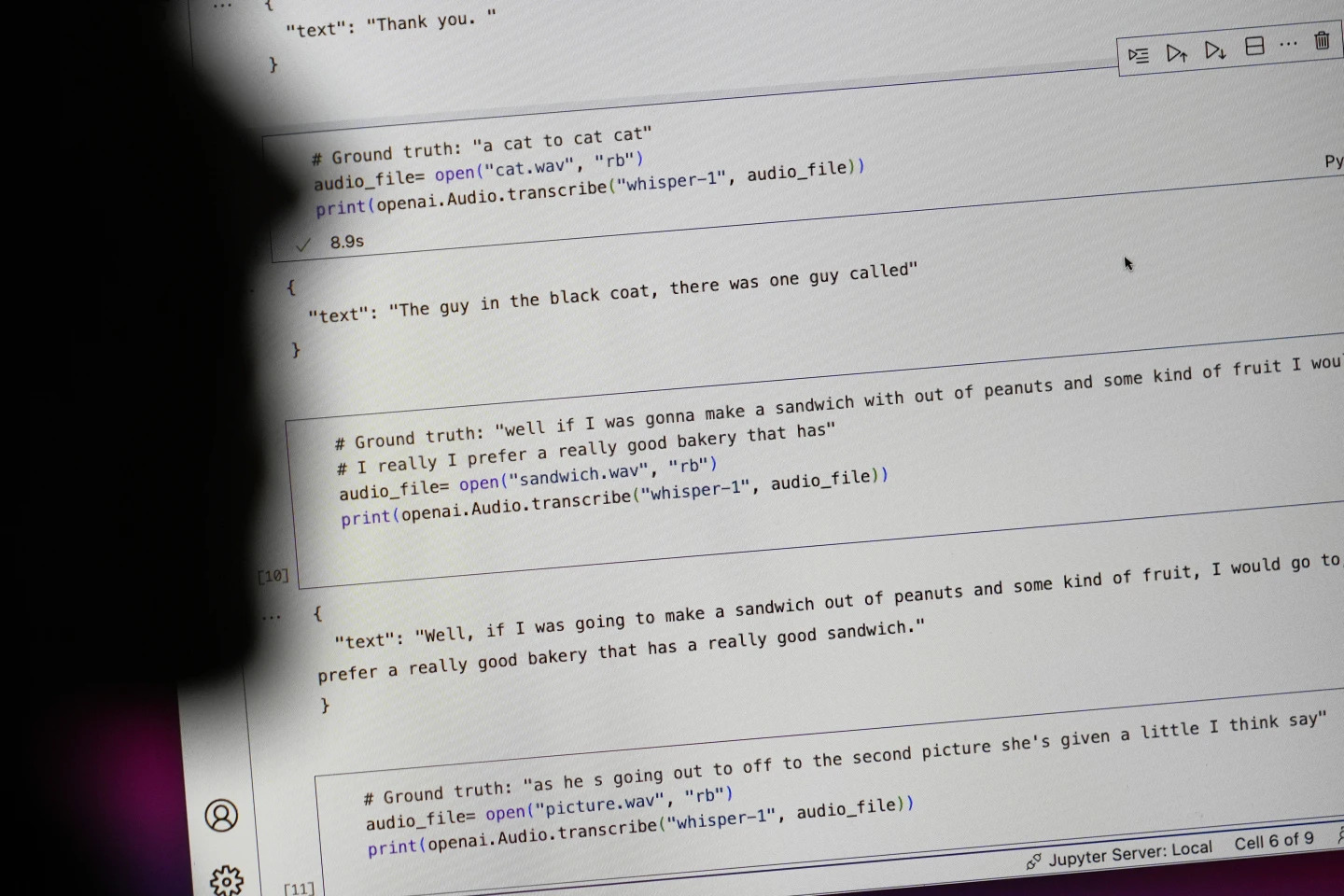

Sentences starting with "#Ground truth" are what was actually said, sentences starting with "#text" are what Whisper transcribed. Photo: AP

Researchers and engineers say Whisper frequently produces hallucinations during use. For example, a University of Michigan researcher said he found hallucinations in eight out of 10 recordings he examined.

A machine learning engineer said he initially found hallucinations in about half of the more than 100 hours of Whisper transcripts he analyzed. A third developer said he found hallucinations in nearly every one of the 26,000 transcripts he created with Whisper.

The problem persists even in short, well-recorded audio samples. A recent study by computer scientists found 187 hallucinations in more than 13,000 clear audio clips they examined.

That trend would lead to tens of thousands of errors across millions of recordings, the researchers said.

Such mistakes can have “really serious consequences,” especially in a hospital setting, said Alondra Nelson, a professor in the School of Social Sciences at the Institute for Advanced Study.

“Nobody wants to be misdiagnosed. There needs to be a higher barrier,” said Nelson.

Professors Allison Koenecke of Cornell University and Mona Sloane of the University of Virginia examined thousands of short excerpts they retrieved from TalkBank, a research archive housed at Carnegie Mellon University. They determined that nearly 40% of the hallucinations were harmful or disturbing because the speaker could be misunderstood or misrepresented.

A speaker in one recording described "two other girls and a woman", but Whisper fabricated additional racial commentary, adding "two other girls and a woman, um, who was black".

In another transcription, Whisper invented a non-existent drug called "antibiotics with increased activity".

While most developers acknowledge that transcription tools can misspell words or make other errors, engineers and researchers say they have never seen an AI-powered transcription tool hallucinate as much as Whisper.

The tool is integrated into several versions of OpenAI's flagship chatbot, ChatGPT, and is built into Oracle's and Microsoft's cloud computing platforms, which serve thousands of companies worldwide. It is also used to transcribe and translate text into many languages.

Ngoc Anh (according to AP)

Source: https://www.congluan.vn/cong-cu-chuyen-giong-noi-thanh-van-ban-ai-cung-co-the-xuyen-tac-post319008.html
