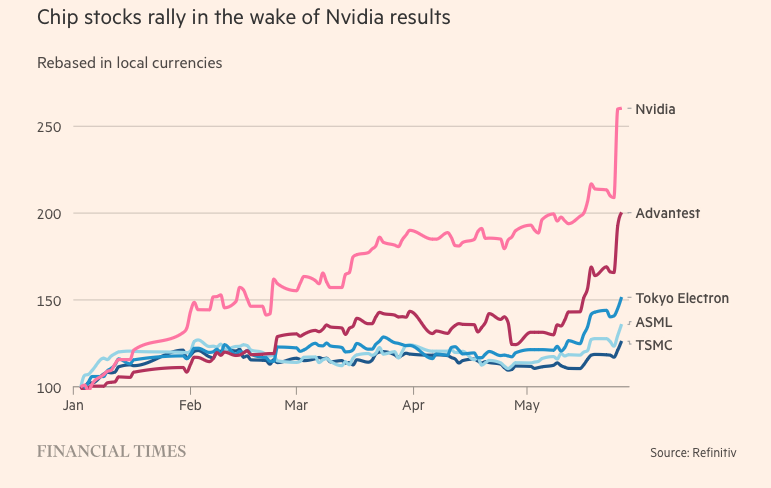

Nvidia was once known as a maker of chips used in video games, but has shifted its focus to the data center market in recent years.

The US chip company thrived during the pandemic, as demand for gaming and cloud applications surged and a cryptocurrency mining craze spread around the world. In the fiscal year ended January 29, the data center chip business accounted for more than 50% of the company's revenue.

Meanwhile, the popular chatbot ChatGPT took generative artificial intelligence (AI) to the next level this year, using large amounts of existing data to create new content on topics ranging from poetry to computer programming.

Tech giants Microsoft and Alphabet are also major players in the AI space, betting that generative technology will change the way people work. The two have launched a race to build AI into search engines and office software, each aiming to dominate the industry.

Goldman Sachs estimates that US investment in AI could be approximately 1% of the country's economic output by 2030.

Supercomputers used to process data and run generative AI rely on graphics processing units (GPUs). GPUs handle the specific calculations involved in AI computing much more efficiently than the central processing units (CPUs) made by chipmakers such as Intel. OpenAI’s ChatGPT, for example, is powered by thousands of Nvidia GPUs.

Nvidia holds about 80% of the GPU market. Its main competition comes from Advanced Micro Devices and from in-house AI chips developed by tech companies such as Amazon, Google and Meta Platforms.

The secret to its rise

The company’s leap forward was made possible by the H100, a chip built on Nvidia’s new “Hopper” architecture, named after American programming pioneer Grace Hopper. The explosion of artificial intelligence has made the H100 the hottest commodity in Silicon Valley.

The super-sized chip, designed for data centers, has 80 billion transistors, five times as many as the processors that power the latest iPhones. The H100 costs twice as much as its predecessor, the A100 (released in 2020), but users say it delivers three times the performance.

The H100 has proven particularly popular with “Big Tech” companies such as Microsoft and Amazon, which are building entire data centers around AI workloads, and with new-generation AI startups such as OpenAI, Anthropic, Stability AI and Inflection AI, because its higher performance can speed up product launches or reduce training costs over time.

“This is one of the most scarce engineering resources out there,” said Brannin McBee, chief strategy officer and founder of CoreWeave, an AI-focused cloud startup that was one of the first companies to receive H100 shipments earlier this year.

Other customers have not been as lucky as CoreWeave, waiting up to six months for the chips they need to train their massive models. Many AI startups worry that Nvidia will not be able to meet market demand.

Elon Musk also ordered thousands of Nvidia chips for his AI startup, saying “GPUs are harder to come by than drugs right now.”

“Computing costs have skyrocketed. The minimum spend on server hardware for generative AI has reached $250 million,” the Tesla CEO said.

While the H100 is timely, Nvidia’s AI breakthrough traces back nearly two decades, to software innovation rather than hardware. In 2006, the company introduced CUDA, a software platform that allows GPUs to be used as accelerators for tasks other than graphics.

“Nvidia saw the future before everyone else and pivoted to programmable GPUs. They saw the opportunity, bet big, and consistently outpaced their competitors,” said Nathan Benaich, a partner at Air Street Capital and an investor in AI startups.

(According to Reuters, FT)
