On September 23, more than 200 former heads of state, diplomats, Nobel Prize-winning scientists and artificial intelligence (AI) experts signed an initiative calling for the creation of a "global red line" for AI.

The initiative, led by the French Center for AI Safety (CeSIA), The Future Society and UC Berkeley, calls on governments to reach an international agreement by the end of 2026 on absolute limits that AI must never exceed.

Signatories include scientist Geoffrey Hinton, OpenAI co-founder Wojciech Zaremba, Anthropic Chief Information Security Officer Jason Clinton, and many other AI leaders.

“If countries cannot agree on what they want to do with AI, they must at least agree on what AI absolutely cannot do,” stressed Mr. Charbel-Raphaël Segerie, CEO of CeSIA.

Some jurisdictions have already set limits, such as the European Union’s AI Act or the US-China agreement that nuclear weapons must always remain under human control. However, there is no global consensus yet.

According to Niki Iliadis (The Future Society), voluntary commitments by businesses are “not binding enough”. She calls for an independent, authoritative international organization to define and monitor “red lines”.

Professor Stuart Russell (UC Berkeley) believes that the AI industry needs to choose a safe technological path from the beginning, just as the nuclear energy industry builds plants only after finding a way to control the risks. He asserts that setting “red lines” does not hinder economic development; on the contrary, it helps AI serve humans safely and sustainably.

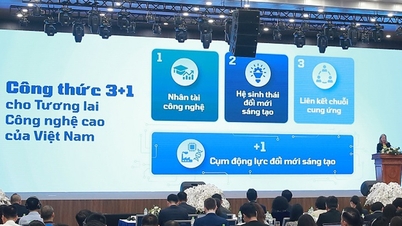

Earlier, at a workshop on AI strategy on September 11, Associate Professor Dr. Nguyen Quan, former Minister of Science and Technology, pointed out the dark sides of AI use and called for Vietnam to prioritize AI ethics when drafting related laws and regulations.

Speaking from the perspective of a state management agency, Dr. Ho Duc Thang, Director of the National Institute of Digital Technology and Digital Transformation (Ministry of Science and Technology), said that the AI Law will be based on a number of principles to ensure a balance between regulation and promoting development.

A human-centered law requires AI to remain under permanent human control. Humans must bear responsibility; AI cannot make decisions on its own. For example, self-driving cars must have human supervision.

Source: https://vietnamnet.vn/hon-200-leaders-and-specialists-keu-goi-lan-ranh-do-cho-ai-truoc-nam-2026-2445470.html
